During the pandemic, a third of people in the UK reported that their trust in science had increased, we recently found. But 7% said it had decreased. Why is there such variety in the responses?

For many years, it was thought that the main reason some people reject science was a simple lack of knowledge and a fear of the unknown. Consistent with this, many surveys have reported that attitudes toward science are more positive among people who know more textbook science.

But if that were indeed the main problem, the remedy would be simple: tell people the facts. This strategy, which dominated science communication for much of the latter part of the 20th century, has, however, failed on multiple levels.

In controlled experiments, giving people scientific information was found not to change their attitudes. And in the UK, scientific messaging about genetically modified technologies has even backfired.

The failure of the information-based strategy may be down to people discounting or avoiding information that conflicts with their beliefs, a tendency known as confirmation bias. A second problem, however, is that some people trust neither the message nor the messenger. This means that distrust of science is not necessarily the result of a lack of knowledge alone, but of a lack of trust.

With this in mind, many research teams, including ours, set out to discover why some people trust science and others don't. One strong predictor of distrusting science during the pandemic stood out: distrusting science in the first place.

Understanding distrust

Recent evidence shows that people who reject or distrust science are not especially well informed about it but, more importantly, they generally believe that they do understand it.

Over the past five years, this finding has emerged repeatedly in studies of attitudes to a wide range of scientific issues, including vaccines and GM foods. It also holds, we found, even when people are not asked about any particular technology. However, the pattern may not apply to certain politicized sciences, such as climate change.

Recent work has also found that overconfident people who dislike science tend to hold the misguided belief that their view is the common one, and therefore that many others agree with them.

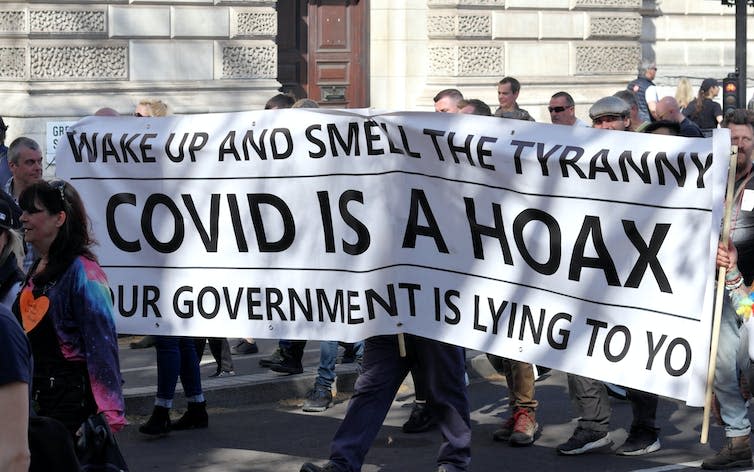

Other evidence suggests that some of those who reject science also derive psychological satisfaction from framing their alternative explanations in a way that cannot be disproven. Conspiracy theories are often of this nature, be it microchips in vaccines or COVID-19 being caused by 5G radiation.

But the whole point of science is to examine and test theories that can be proven wrong: theories that scientists call falsifiable. Conspiracy theorists, on the other hand, often reject information that does not fit their preferred explanation by, as a last resort, questioning the messenger's motives instead.

When someone who trusts the scientific method debates with someone who doesn't, they are essentially playing by different rules of engagement. This makes it difficult to convince skeptics that they might be wrong.

Finding solutions

So what can we do with this new understanding of attitudes towards science?

The messenger is every bit as important as the message. Our work confirms many previous surveys showing that politicians, for example, are not trusted to communicate science, whereas university professors are. This should be kept in mind.

The fact that some people hold negative attitudes, bolstered by a misguided belief that many others agree with them, suggests an additional potential strategy: tell people what the consensus position is. The advertising industry got there first. Statements such as "eight out of ten cat owners say their pet prefers this brand of cat food" are popular.

A recent meta-analysis of 43 studies that investigated this strategy (these were "randomized controlled trials", the gold standard in scientific testing) found support for this approach to changing belief in scientific facts. By specifying the consensus position, it implicitly clarifies what counts as misinformation or unsupported ideas, which means it could also address the problem that half of people do not know what is true because conflicting evidence is in circulation.

A complementary approach is to prepare people for the possibility of misinformation. Misinformation spreads quickly and, unfortunately, every attempt to debunk it only brings the misinformation further into view. Scientists call this the "continued influence effect". Genies are never put back in bottles. It is better to anticipate objections, or to inoculate people against the strategies used to promote misinformation. This is called "pre-bunking", as opposed to debunking.

However, different strategies may be needed in different contexts. It matters whether the science in question is backed by a consensus among experts, as with climate change, or is cutting-edge research into the unknown, as with an entirely new virus. For the latter, a good approach is to explain what we know, what we don't know and what we are doing, and to emphasize that the results are provisional.

By emphasizing uncertainty in rapidly changing fields, we can pre-empt the objection that a messenger cannot be trusted because they said one thing one day and something else later.

But no strategy is likely to be 100% effective. We found that even with widely discussed PCR tests for COVID, 30% of the public said they had never heard of PCR.

A common quandary for much science communication may be that it appeals mainly to those already engaged with science. Maybe that's why you're reading this.

That said, the new science of communication suggests that it is well worth trying to reach out to those who are disengaged.

This article from The Conversation is republished under a Creative Commons license. Read the original article.

Laurence D. Hurst receives funding from the Evolution Education Trust. He is affiliated with The Genetics Society. Dr Cristina Fonseca contributed to this article, as well as to some of the research cited, which was funded by The Genetics Society.