Many ways of constraining artificial intelligence (AI) have been proposed, because of its potential to cause harm in society as well as to bring benefits.

For example, the EU AI Act places greater restrictions on systems based on whether they fall into the category of general purpose and generative AI or are considered limited risk, high risk or unacceptable risk.

This is a new and bold approach to mitigating any negative impact. But what if we could adapt some existing tools? One well-known model is software licensing, which could be adapted to meet the challenges of advanced AI systems.

Open responsible AI licenses (OpenRAILs) could be part of this answer. AI licensed with OpenRAIL is similar to open source software. A developer may release their system publicly under the license. This means that anyone is allowed to use, adapt and redistribute what was originally licensed.

The difference with OpenRAIL is the addition of conditions for the responsible use of AI. These include not using it to break the law, impersonate people without consent or discriminate against people.

Along with the mandatory conditions, OpenRails can be adapted to take into account other conditions directly related to the specific technology. For example, if an AI was created to categorize apples, the developer may specify that it should not be used to categorize oranges, as it would be irresponsible to do so.

This model can be helpful because many AI technologies are so general that they could be used for many things. It is very difficult to predict the terrible ways in which they could be exploited.

So this model allows developers to help push open innovation forward while at the same time reducing the risk of their ideas being used in irresponsible ways.

Open but responsible

In contrast, proprietary licenses are more restrictive on how software can be used and adapted. They are designed to protect the interests of creators and investors and have helped tech giants like Microsoft build huge empires by charging for access to their systems.

Because AI has such a wide range of applications, it could be argued that it requires a different, more nuanced approach, one that promotes the openness that drives progress. Many large firms currently operate proprietary (closed) AI systems. But this could change, as there are several examples of companies using an open source approach.

Meta’s generative AI system, Llama-v2, and the Stable Diffusion image generator are open source. French AI startup Mistral, founded in 2023 and now valued at US$2 billion (£1.6 billion), is soon to openly release its latest model, which is rumored to have performance comparable to GPT-4 (the model behind ChatGPT).

However, openness must be tempered with a sense of responsibility to society, given the potential risks associated with AI. These include the possibility of algorithms discriminating against people, replacing jobs and even creating existential threats to humanity.

We should also think about the more humdrum, everyday uses of AI. The technology will increasingly become part of our societal infrastructure, an integral part of how we access information, form opinions and express ourselves culturally.

Such a universally important technology creates its own kind of risk, one that is separate from the robot apocalypse but still worth considering.

One way to do this is to compare what AI may do in the future with what free speech does now. The free sharing of ideas is not only critical to upholding democratic values but is also the engine of culture. It facilitates innovation, encourages diversity and allows us to distinguish truth from falsehood.

The AI models being developed today are likely to become a primary way of accessing information. They will shape what we say, what we see, what we hear and, by extension, how we think.

In other words, they will shape our culture in much the same way that free speech does. For this reason, there is a good argument that the results of AI innovation should be free, shared and open. And, as it happens, most of it already is.

Limits are needed

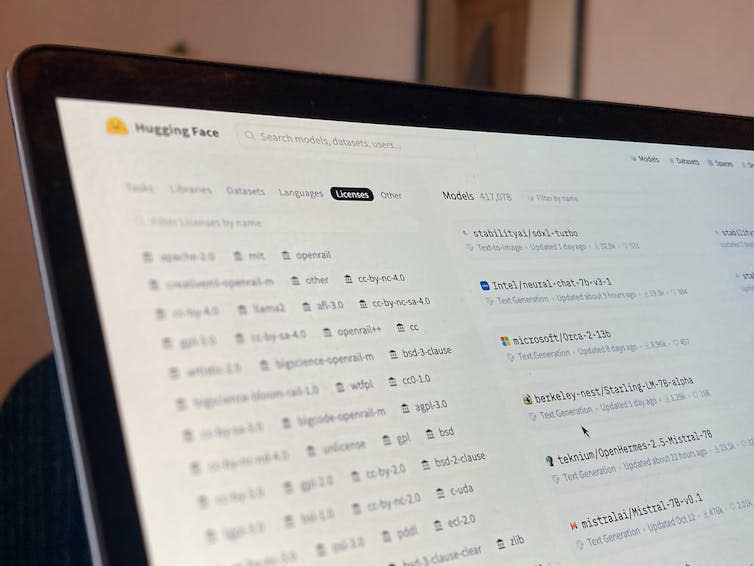

On the HuggingFace platform, the world’s largest AI developer hub, over 81,000 models are currently published using “permissive open source” licenses. Just as the right to free speech benefits society, this open sharing of AI is an engine of progress.

However, free speech has necessary ethical and legal limits. Prohibitions on false claims that harm others and on expressions of hatred based on ethnicity, religion or disability are both widely accepted limitations. What OpenRAILs do is provide a way for innovators to find this balance in the field of AI innovation.

For example, deep learning technology is applied in many worthwhile fields, but it also underpins deepfake videos. The developers probably didn’t want their work to be used to spread misinformation or create non-consensual pornography.

OpenRail would provide them with the ability to share their work with restrictions that would, for example, prevent anything that would violate the law, cause harm or result in discrimination.

Legally enforceable

Can OpenRAIL licenses help us avoid the ethical dilemmas that AI will inevitably pose? Licensing can only go so far, and one limitation is that licenses are only as good as the ability to enforce them.

At present, enforcement is likely to be similar to that for music copying and software piracy, with cease and desist letters being sent with the expectation of possible court action. While such measures do not stop piracy, they do discourage it.

Despite these limitations, there are many practical advantages: licenses are well understood by the technology community, are easily scalable and can be adopted with minimal effort. Developers have recognized this, and to date, more than 35,000 models hosted on HuggingFace have adopted OpenRAILs.

Ironically, given the company’s name, OpenAI (the company behind ChatGPT) does not openly license its most powerful AI models. Instead, with its flagship language models, the company operates a closed approach that gives access to the AI to anyone willing to pay, while preventing others from building on or adapting the underlying technology.

As with the free speech analogy, the freedom to share AI openly is something we should hold dear, but perhaps not absolutely. While not a complete cure, licensing-based approaches such as OpenRAIL seem like a promising piece of the puzzle.

This article from The Conversation is republished under a Creative Commons license. Read the original article.

Joseph’s research is currently supported by Design Research Works (https://designresearch.works) under United Kingdom Research and Innovation (UKRI) grant reference MR/T019220/1.

Jesse’s research is supported by Design Research Works (https://designresearch.works) under United Kingdom Research and Innovation (UKRI) grant reference MR/T019220/1. They are both members of the steering committee of the Responsible AI Licensing initiative (https://www.licenses.ai/).