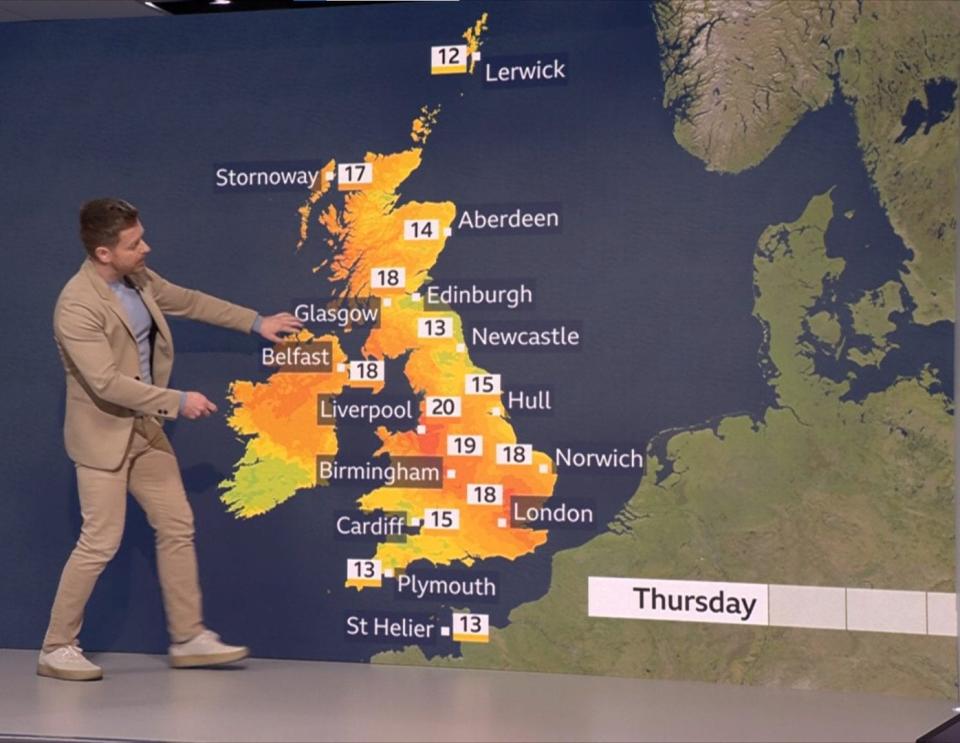

When the BBC’s weather map turned yellow this week, and it looked as though a dose of Mediterranean sun was on its way just in time for the long weekend, viewers were dismayed to learn that temperatures would in fact reach a less-than-tropical 15C. “I think it’s very misleading,” says 43-year-old florist Vicky Laffey. “If you look at a map that’s full of orange and yellow, the country looks like it’s in a heat wave and it gives people the wrong impression.”

There seems to be an innocent explanation, although the corporation recently had to defend itself against the suggestion that it had intensified its map colors to raise the alarm about rising temperatures.

“The colors used now range from blue for the coldest temperatures to red for the hottest temperatures as these colors are easier to see if you are color blind,” explains a BBC spokesperson.

However, hell hath no fury like a Brit who has already started unpacking the garden furniture. This week’s saga is perhaps unsurprising, given that weather forecasting got off to an inauspicious start.

The Meteorological Office was founded in 1854 by Captain Robert FitzRoy, later a vice-admiral in the Navy, who was all too familiar with the effects of weather on life at sea. In an effort to protect sailors, daily weather reports were sent to him in London from places around the coast of England and Ireland via new telegraph technology. His role was to try to make sense of the data, pioneering the science we now know as meteorology. As with any emerging system, however, mistakes occurred, and public criticism of his weather reports, together with his depression, led him to take his own life in 1865.

Later that year, the Royal Society – which would manage the Meteorological Office for 40 years after FitzRoy’s death – ruled that “we can find no evidence that any competent meteorologist believes that science is at present in a position to enable an observer to indicate from day to day the weather that will be felt in the next forty-eight hours.” Ninety years later, in 1955, the commander of the Dunstable Central Forecast Station said that “any forecast issued more than 24 hours in advance can be guaranteed little accuracy”.

Knowledge of the weather – and, most importantly, of what is in store for the coming days and weeks – has occupied the human mind since the beginning of time. The success of crops, of course, depends heavily on water and sun, but only in the right amounts and at the right time. Droughts, floods and locusts crop up in many biblical stories, and the Great Famine of 1315-1317, caused largely by a climatic anomaly that brought cooler temperatures and heavy rains, killed up to 12 percent of the population of northern Europe.

And let’s not forget how many military efforts have been undone by bad winds. Take the Spanish Armada: many of the 130 ships in the fleet were dashed on the rocks off the west coasts of Scotland and Ireland. The D-Day landings were delayed by 24 hours due to strong winds and unfavorable sea conditions. You can’t help but feel for Group Capt James Stagg, Eisenhower’s chief meteorologist, who, with his team, was tasked with coming up with accurate forecasts. Despite their efforts, the weather was still difficult to predict, and D-Day, June 6, offered far from ideal conditions for landing more than 130,000 men on hostile beaches.

Those ventures, at the mercy of wind and tide, feel far removed from where we are now, when rain or sunshine can be forecast almost to the minute. The UK no longer relies on a permanently staffed observatory at the summit of Ben Nevis, a central monitoring tool until 1904, and coastal stations no longer hoist cones to warn of incoming winds. The first major change in weather forecasting was the introduction in 1922 of Numerical Weather Prediction, which uses mathematical models of the atmosphere and oceans to predict the weather from current conditions.

It took so long to work through these huge data sets, however, that by the time a forecast had been worked out, the weather had already happened. It was only with the introduction of computer simulation in the 1950s that all this information could be processed in a timely manner, and the first computer-driven reports appeared in the second half of the 1960s. It was a big step forward from the “Red sky in the morning, shepherd’s warning” level of weather forecasting.

“Have we gotten better at learning to read the signs in the sky? In a word, no,” says Tristan Gooley, author of books including The Secret World of Weather. Over the past century, “the way weather has been studied and our understanding of it has been based on better monitoring and modeling of the atmosphere. But it’s usually in a very macro way,” he explains. “Weather is something that is happening over hundreds of miles, but just 100 years ago it was something that was happening over hundreds of yards.”

Although we have moved on from extrapolating meaning from cloud formations and the color of sunsets, some relatively modern events have clearly demonstrated what an imprecise science weather forecasting is. Consider Michael Fish’s now infamous comment, made on 15 October 1987: “Earlier today, apparently, a woman called the BBC and said she’d heard a hurricane was on the way. Well, if you’re watching, don’t worry, it’s not!” It was quickly followed by a storm and a £1.5 billion repair bill for the damage caused.

Since 2016, the Met Office has used the Cray XC40 supercomputer, which it calls “one of the most powerful in the world, dedicated to weather and climate forecasting”. About 15 times more powerful than its predecessor, the Cray XC40 aggregates 215 billion weather observations around the world every day. These are then fed into an atmospheric model containing millions of lines of code that generate forecasts.

Douglas Parker, professor of meteorology at the University of Leeds, says that while British forecasts were notoriously unreliable in the 1970s and 1980s, things have changed. Now, “you meet ordinary people painting a house, or builders, who will use the forecast [and] rely on it on a daily basis,” he says. From agriculture to shipping, aviation and power, the country’s GDP – and how we get our food and goods and how we keep the lights on – depends on the accuracy of forecast data.

So does its importance make it vulnerable to cyber attack? “I think that’s always possible, and I’m sure that’s a risk,” Parker replies. “[But] security at the Met Office in the UK is extremely high due to their responsibility for vital industries.”

He describes the one-day forecast as “super accurate” (the Met Office says its four-day forecast is now as accurate as its one-day forecast was 30 years ago). But he acknowledges that blind spots remain. Within a kilometer – a geographical area that would fall under the same forecast – there can be significant variation depending on whether you are in a field or on a street, on a hill or in a valley. Fog is also a big challenge, according to Parker. “Clouds and ice have very complex physics,” he says, and “actually trying to put those into equations in a model” remains far from the precision currently possible for other types of weather.

I suspect most of us like to track the 14-day forecast before we go away, but is that of much use? Parker suggests not: “If you go up to five days, well, we certainly have less statistical confidence sometimes… If you go up to 10 days, it’s just an illustration.”

For Gooley, knowing the ground is the best way to get an accurate forecast. “If the world’s best meteorologists were to borrow a hundred of the world’s most powerful computers, they would still have a hard time working out exactly where tomorrow’s predicted shower will be,” he says. “A man sensitive to his landscape has powers of understanding denied to machines.”

That won’t stop scientists from refining their mapping tools, nor from using AI for the same purpose. In the 1970s, before supercomputers, forecasters would use past weather patterns to make forecasts. “If you do it by hand, like people have been doing, it’s actually very inaccurate,” says Gooley (because there wasn’t enough data to go on at the time).

Now, however, the ability of algorithms to “make a pattern from past events suddenly becomes very effective, and that has been a revolution in the last few years,” he says. “When you train them, they can be very fast.” For now, though, those models are no more skilled than our current ones (“and probably a little less skilled,” according to Parker). Will we be able to get a better – or at least, less dodgy – forecast in a few years? “It can’t be ruled out,” he says. “No one knows what will come.”