A room-sized computer equipped with a new type of circuitry, the Perceptron, was introduced to the world in 1958 in a short news story buried deep in The New York Times. The story quoted the US Navy as saying that the Perceptron would lead to machines “that can walk, talk, see, write, reproduce itself and be aware of its existence.”

More than six decades later, similar claims are being made for current artificial intelligence. So what has changed in the intervening years? In some ways, not much.

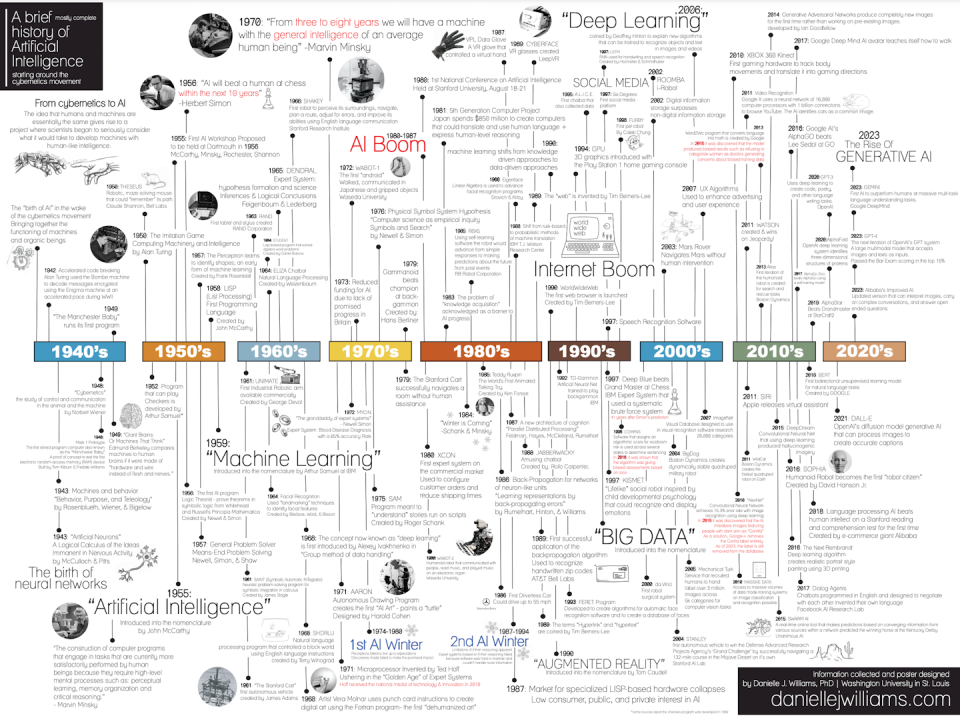

The field of artificial intelligence has been going through a boom-and-bust cycle since its early days. Now, with the field in yet another boom, many in the tech industry seem to have forgotten the failures of the past – and the reasons for them. While hope inspires progress, history is worth paying attention to.

It could be argued that the Perceptron, invented by Frank Rosenblatt, laid the foundation for AI. The analog electronic computer was a learning machine designed to predict whether an image fell into one of two categories. This revolutionary machine was filled with wires that physically connected its different components. Today’s artificial neural networks, which underpin familiar AI like ChatGPT and DALL-E, are software versions of the Perceptron, but with substantially more layers, nodes and connections.

Much like present-day machine learning, if the Perceptron returned the wrong answer, it would alter its connections so that it could make a better prediction the next time around. Modern AI systems work in much the same way. Using a prediction-based format, large language models, or LLMs, can produce impressive long-form text responses and associate images with text to produce new images based on prompts. These systems get better and better as they interact more with users.
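The error-driven adjustment described above can be sketched in a few lines of Python. This is a minimal illustration of the textbook perceptron learning rule, not a model of Rosenblatt's analog hardware. The function names, the toy AND-gate data, the learning rate and the number of passes are all assumptions chosen for the example.

```python
def predict(weights, bias, inputs):
    """Return 1 if the weighted sum reaches the threshold, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Adjust weights only when a prediction is wrong (hypothetical helper)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - predict(weights, bias, inputs)
            if error != 0:
                # Wrong answer: nudge each connection toward the correct output.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
    return weights, bias


# Toy two-category problem (assumed for illustration): logical AND.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, s) for s in samples]
print(predictions)  # [0, 0, 0, 1]
```

A single unit like this can only separate categories that a straight line can divide, which is one reason modern networks stack many such units into layers.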

The boom and bust of AI

In the decade or so after Rosenblatt unveiled the Mark I Perceptron, experts such as Marvin Minsky claimed that by the mid-to-late 1970s the world would have “a machine with the general intelligence of an ordinary human.” But despite some successes, humanlike intelligence was nowhere to be found.

It quickly became clear that the AI systems knew nothing about their subject matter. Without the appropriate background and contextual knowledge, it is almost impossible to accurately resolve the ambiguities present in ordinary language – a task that humans perform with ease. The first AI “winter,” or period of disillusionment, hit in 1974 following the perceived failure of the Perceptron.

However, by 1980, AI was back in business, and the first official AI boom was in full swing. New expert systems, AIs designed to solve problems in specific areas of knowledge, could identify objects and diagnose diseases from observable data. There were programs that could make complex inferences from simple stories, the first driverless car was ready to hit the road, and robots that could read and play music were performing for live audiences.

But it wasn’t long before the same problems stifled excitement once again. In 1987, the second AI winter hit. Expert systems were failing because they could not handle novel information.

The 1990s changed the way experts approached problems in AI. Although the eventual thaw of the second winter did not lead to an official boom, AI underwent substantial changes. Researchers were tackling the knowledge acquisition problem with data-driven approaches to machine learning that changed how AI acquired knowledge.

This time also marked a return to the neural-network-style perceptron, but this version was much more complex, dynamic and, most importantly, digital. The return of the neural network, along with the invention of the web browser and an increase in computing power, made it easier to collect images, mine data and distribute datasets for machine learning tasks.

Familiar refrains

Fast forward to today, and confidence in AI progress has once again begun to echo the promises made nearly 60 years ago. The term “artificial general intelligence” is used to describe the activities of LLMs such as those that power AI chatbots like ChatGPT. Artificial general intelligence, or AGI, describes a machine with human-like intelligence, meaning that the machine would be self-aware, able to solve problems, learn, plan for the future and possibly be conscious.

Just as Rosenblatt thought his Perceptron was the basis for a conscious, humanlike machine, so too do some contemporary AI theorists think of today’s artificial neural networks. In 2023, Microsoft published a paper stating that “GPT-4 performance is remarkably close to human-level performance.”

But before claiming that LLMs are displaying human-level intelligence, it might help to consider the cyclical nature of AI progress. Many of the same problems with earlier iterations of AI are still present today. The difference is how those problems are manifested.

For example, the knowledge problem persists to this day. ChatGPT continually struggles to respond to idioms, metaphors, rhetorical questions and sarcasm – forms of language that go beyond grammatical connections and instead require inferring the meaning of words from context.

Artificial neural networks can, with remarkable accuracy, pick out objects in complex scenes. But give an AI a picture of a school bus lying on its side and, 97% of the time, it will confidently say it’s a snowplow.

Lessons to heed

In fact, it turns out that it’s quite easy to fool AI in ways that humans would immediately recognize. I think that is a consideration worth taking seriously, given how things have gone in the past.

Today’s AI looks very different than AI once did, but the problems of the past remain. As the saying goes: History may not repeat itself, but it often rhymes.

This article is republished from The Conversation, a non-profit, independent news organization that brings you reliable facts and analysis to help you make sense of our complex world. Written by: Danielle Williams, Arts and Sciences at Washington University in St. Louis

Danielle Williams does not work for, consult with, own shares in, or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.