AI has uses. Although it’s tempting to think of AI in terms of chatbots, image generators and maybe the thing that could end us all if we’re not careful, big tech companies are busy implementing AI and machine learning optimization across multiple aspects of their businesses, integrating what used to be a marginal concept into the processes that create the products you may already own.

I recently had the opportunity to chat with the President of Intel India and VP & Head of Sustainability of the Client Computing Group, Gokul Subramaniam, in a wide-ranging discussion about Intel’s sustainability goals, product lifecycles and more.

I asked how Intel uses AI and machine learning models to improve efficiency in its products, and whether AI has been used to optimize the process of making them.

“We have AI in engineering as a big area of focus, starting from the front end of our silicon design at the RTL level to the back end, which essentially takes the silicon to production readiness. And then in our software development and debugging we use AI, as well as in our manufacturing and test.

“We also use AI on a lot of the telemetry data that we collect, to figure out what decisions we can make in terms of usage and things like that. So it’s a big area of focus across the lifecycle: silicon front-end design, back-end, software development, all the way to manufacturing.”

So it seems, according to Intel, that AI is already involved in a large number of aspects of chip design and production. However, since our discussion was focused on Intel’s sustainability goals, I also asked him about his views on the scalability of AI, and the sustainability of the increased power demands that come with it.

“One of the things that Intel believes in is the AI continuum, from the cloud data center to the edge of the network and the computer. Now, what that means is, it’s not one size fits all; you can’t have one monolithic view of computing. There is a variety of needs in the AI continuum, from the large and very large models to the small and nimble models that can run in devices at the edge.

“So it’s everything from high-performance computing, like Argonne labs with tens of thousands of servers, to start-ups and smaller companies that might only need a few Xeons, or maybe Xeons and a few GPUs. And so we have this multivariate calculation that allows people to fit their power envelope and calculate gains.”

While these are noble goals, it’s hard to ignore the recent headlines raising concerns about the sustainability of AI, which often involves massive amounts of processing power and, in turn, staggering amounts of actual electrical power.

“AI is new, I don’t think anyone has cracked the code,” says Subramaniam. “What that means for sustainability, it’s going to be a journey that we take and learn … as long as we’re very focused on power efficiency and allowing our customers to build with the technologies that we bring to them outside of silicon, that’s where it will work.”

While facilities like Argonne National Laboratory, home to the Aurora exascale supercomputer built on Intel Xeon CPUs and Data Center GPU Max Series chips, are likely to draw huge amounts of power regardless, it’s interesting to hear that Intel sees AI workloads as distributed across different types of computing for different purposes.

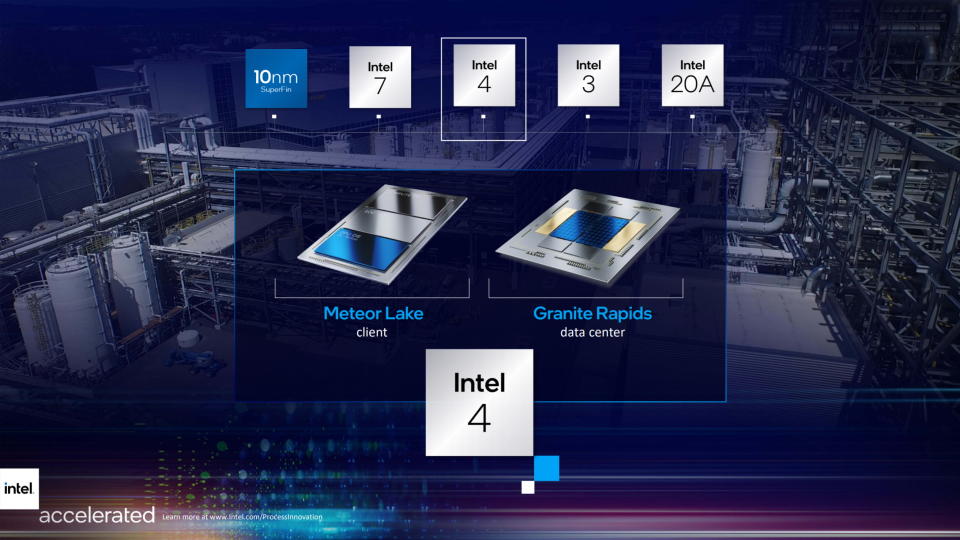

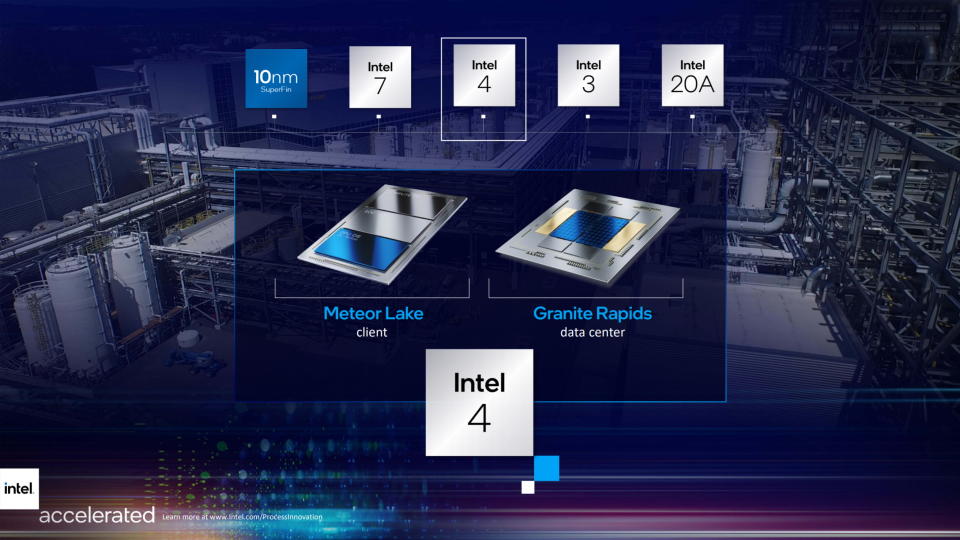

With the rise of consumer chips containing NPUs (neural processing units) designed specifically for AI processing, first seen in Intel’s Meteor Lake mobile CPUs and expected to appear in the as-yet-unannounced Arrow Lake desktop CPUs, it seems the focus is not just on massive amounts of data center AI computing power, but on spreading AI processing across chips as a whole.

With the rise of the “AI PC”, the chip industry seems to be betting heavily on the future of AI, although there’s an argument to be made that any computer with a modern GPU is already equipped for AI processing. The current definition of an AI PC, however, seems to range from any computer delivering more than 45 TOPS (trillion operations per second) from a dedicated NPU to one that simply has the appropriate sticker on its keyboard.

Regardless, it seems to be turtles all the way down for Intel, or in this case, AI from top to bottom. It seems likely that your next CPU will have felt the touch of artificial intelligence, and the one you’re currently using may already have, at least if it’s an Intel unit.