- Experts suggest that Generative AI could improve decision-making in wargaming.
- Wargaming faces issues of cost, transparency and accessibility, which AI could help address.
- Despite the potential benefits of AI, there are also limitations that users must keep in mind.

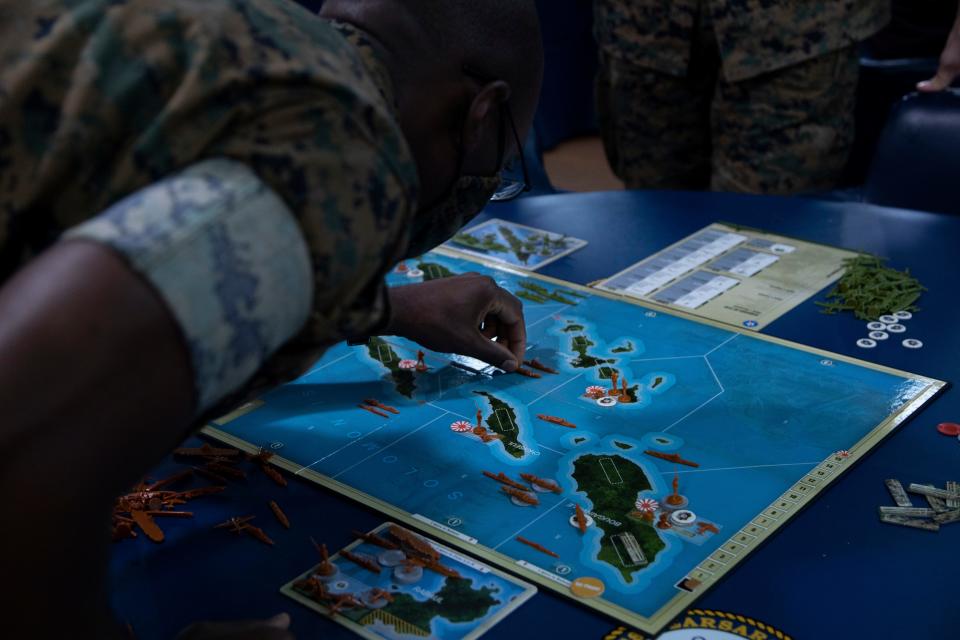

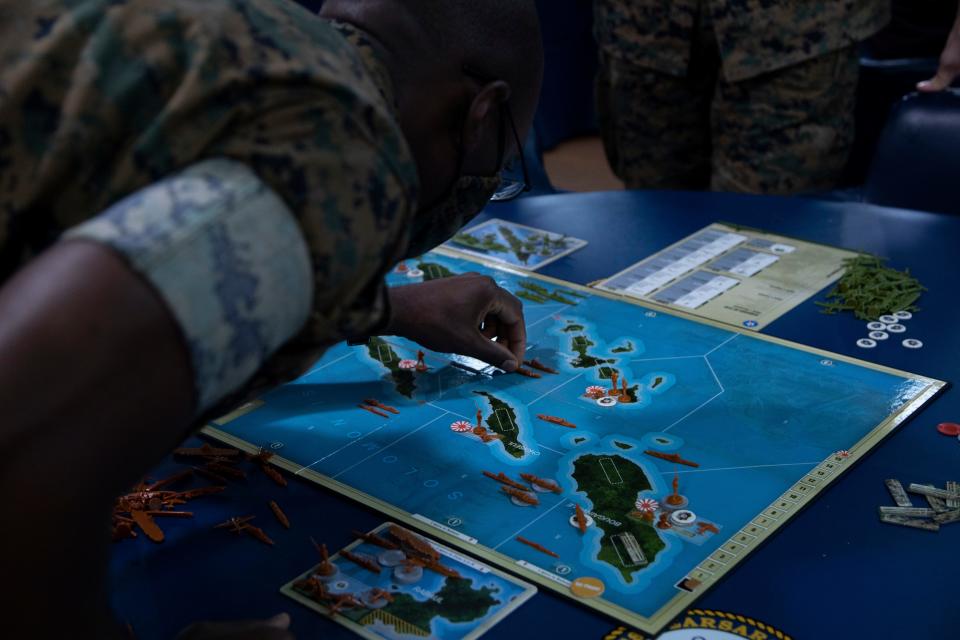

The way militaries prepare for and fight future wars could be fundamentally changed as more machines learn to think through complex situations at both the tactical and strategic levels.

Introducing generative artificial intelligence into wargaming, the extensive simulations used by experts, policy makers, and the military to develop strategy in the event of war, has the potential to improve the decision-making process for participants.

It’s something seen in fiction about imagined future wars, but it’s also being explored right now.

AI “can shape the wargames and really the whole future of war,” Yasir Atalan, associate data fellow at the Center for Strategic and International Studies, told Business Insider. “It will allow policy makers, planners to see what are the weaknesses, what are the opportunities, what are the challenges.”

In a piece Atalan co-wrote with CSIS’s Benjamin Jensen and Dan Tadross, the authors identify cost, transparency and accessibility as among the biggest issues with wargaming.

Wargames can cost anywhere from thousands to millions of dollars and are somewhat exclusive, in that they don’t always take into account the perspectives of qualified players.

“The games affect the quality of the players, but the best players are often too careful when they move,” the CSIS report said. “Instead of relying directly on human players sitting around a table to play a game, the twenty-first century analyst can use AI and generative LLMs to create game agents.”

Speaking to BI about the opportunities available with artificial intelligence, Atalan said that AI, with the right prompts and data curation, could help players in a wargame by providing “different perspectives from different allies,” as well as probability distributions and data points.

Wargaming is often misunderstood as a tool for predicting outcomes, but Atalan, along with other experts, emphasizes that wargaming is not about that.

“It’s up to the players, the different teams, the interested parties, to see what their policy options are, what the opportunities and challenges might be,” he said.

The US military began placing more emphasis on wargaming in 2015, and over the years, interest in the possibilities of AI has grown along with the hype. In February 2023, for example, the US military successfully let AI pilot a fighter jet and engage in simulated air-to-air combat.

There is a growing realization that both interests could be combined to leverage the potential of AI.

“Wargaming and simulation remain critical tools for decision-makers in Defence,” concluded a 2023 study by the London-based Alan Turing Institute. “They can be used to train personnel for future conflict, and offer insights into critical decisions in warfighting, peace negotiations, arms control, and emergency response.”

The study also found that using AI for wargaming could reduce the number of players needed, speed up games, produce new strategies, and better immerse players in games.

AI has limitations in this space as well. A recent RAND study found that AI may not work as well in a wargame if a game’s digital infrastructure is limited or if AI cannot directly interact with the models and computational simulations required.

Wargaming expert Ivanka Barzashka also raised concerns that AI could hide explanations for actions, which could lead to flawed conclusions.

“The current landscape of human-centric wargaming, combined with AI algorithms, faces a significant ‘black box’ challenge where the reasoning behind certain results is unclear,” she wrote in a post for The Bulletin of the Atomic Scientists.

Barzashka argued that “this ambiguity, along with potential biases in AI training data and wargame design, underscores the urgent need for ethical governance and accountability in this evolving field.”

Currently, Atalan told BI, it is difficult for AI to participate alone in wargaming because it cannot do “strategic reasoning”, which could take into account different schools of thought.

Organizations like OpenAI are preparing to create “autonomous agents” that can perform tasks on their own, but Atalan said the current version of wargaming agents is only able to offer the most likely answers based on average data composition and the internet.

Atalan said he would want to be “cautious” with autonomous agents because AI can inherit biases and prejudices depending on the large language models, or LLMs, it is built on.

“When people are using these LLMs in their approach, they need to be transparent, they need to show their tips,” said Atalan. “With experience and new studies we will see what kinds of normative biases these models have for certain actors and also in different languages.”

“It’s impossible to replace people,” Atalan said.

“People are still at the heart of the whole process,” he said, “but the AI assistance can be really useful in the whole thought process and the decision-making processes for the experts.”

Read the original article on Business Insider